Data Minimization: Technical Implementation Strategies That Actually Work (2025 Guide)

Discover how to move beyond data minimization as an abstract principle and implement concrete technical strategies that reduce privacy risk while maintaining business functionality. Learn the five-layer framework that makes minimization systematic, not aspirational—plus the critical decision of when manual processes need to give way to automated enforcement.

I recently worked with a SaaS company that had a fascinating problem. Their privacy policy proudly declared they "collect only data necessary for service delivery." Meanwhile, their registration form collected 23 fields of information—including mother's maiden name—to create a project management account.

When I asked why they needed a mother's maiden name, the CTO said, "Security questions for password resets." When I asked why they needed those instead of email-based resets, he paused. "That's... just how we've always done it."

That's the data minimization challenge in a nutshell. Everyone agrees it's important. Article 5(1)(c) of GDPR requires it. CCPA reinforces it. But translating "collect only what's necessary" into technical reality? That's where most businesses struggle.

This guide bridges that gap. You'll learn practical, implementable strategies that transform data minimization from a compliance checkbox into systematic practice.

What Data Minimization Really Means in Practice (Beyond the Legal Definition)

The legal definition is straightforward: personal data must be "adequate, relevant, and limited to what is necessary" for your stated purposes. The implementation reality is more nuanced.

Data minimization doesn't mean collecting the absolute minimum data to keep your business functioning. It means collecting the minimum data needed for your explicitly stated purposes. That's a critical distinction.

Here's what this looks like in practice:

If your stated purpose is "process customer orders," you need:

- Name for shipping labels

- Address for delivery

- Payment information for transactions

- Email for order confirmation

You don't need:

- Date of birth (unless selling age-restricted products)

- Phone number (unless offering SMS notifications they opted into)

- Social security number (ever, for e-commerce)

- Gender or demographic information (unless directly relevant to product fit)

The difference between what you could find useful and what you actually need for your stated purpose is where data minimization lives.

The Three Common Misconceptions

Misconception #1: "We might need it later"

I hear this constantly. "What if we launch a birthday discount program next year? We should collect birth dates now."

No. Collect birth dates when—and if—you launch that program. Better yet, make it optional even then. Data you don't have can't be breached, can't create privacy obligations, and can't become a regulatory headache.

Misconception #2: "More data means better analytics"

Actually, focused data often produces better insights. When you're drowning in 50 customer attributes, it's harder to identify the 5 that actually predict behavior. Data minimization forces analytical discipline—collect data with specific questions in mind, not "just in case."

Misconception #3: "Minimization means we can't personalize"

Minimization means purposeful personalization, not generic experiences. If your purpose includes "provide personalized recommendations," you can collect preference data. You just need to state that purpose clearly and collect only what serves it.

The key is alignment: your data collection must match your stated purposes, and your stated purposes must justify each data element.

The Five-Layer Data Minimization Framework

Most data minimization guides focus on collection—don't collect unnecessary data. True minimization operates across five distinct layers. Here's the framework I use with clients:

Layer 1: Collection Limitation

This is your first line of defense. Every data point you don't collect is one you don't need to protect, retain, or potentially delete in response to a rights request.

Technical controls:

- Form field validation that rejects unnecessary inputs

- API endpoint restrictions that refuse additional parameters

- Default configurations that disable optional data capture

- Progressive disclosure that reveals collection only when needed
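As a rough illustration of the "refuse additional parameters" control, here is a minimal sketch of an allowlist check. The field names and the `validate_payload` helper are hypothetical, not taken from any specific framework.

```python
# Hypothetical allowlist for an order endpoint; adjust to your stated purposes.
ALLOWED_FIELDS = {"name", "email", "shipping_address"}


def validate_payload(payload: dict) -> dict:
    """Reject any request that carries fields outside the allowlist."""
    unexpected = set(payload) - ALLOWED_FIELDS
    if unexpected:
        raise ValueError(f"Unexpected fields rejected: {sorted(unexpected)}")
    return payload
```

Rejecting unknown fields, rather than silently dropping them, is a design choice: it surfaces clients that are trying to send more data than your documented purposes cover.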

Layer 2: Processing Constraints

Once data enters your systems, how it's used matters as much as what was collected.

Technical controls:

- Purpose flags that tag data with allowed use cases

- Access controls that restrict processing to stated purposes

- Automated flags when data is accessed outside normal patterns

- Processing logs that create accountability trails
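Purpose flags can be as simple as a set of allowed purposes stored alongside each value. The sketch below assumes a hypothetical `TaggedValue` wrapper and purpose names chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaggedValue:
    """A stored value together with the purposes it may be used for."""
    value: str
    allowed_purposes: frozenset


def read_for_purpose(item: TaggedValue, purpose: str) -> str:
    """Return the value only if the requested purpose was declared for it."""
    if purpose not in item.allowed_purposes:
        raise PermissionError(f"Purpose '{purpose}' is not allowed for this data")
    return item.value
```

For example, an email tagged only with `order_confirmation` would raise `PermissionError` when code asks for it under a `marketing` purpose.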

Layer 3: Storage Optimization

Data doesn't need to live forever. Minimization extends to how long you retain information and in what form.

Technical controls:

- Automated retention schedules by data category

- Archival policies that move data to restricted storage

- Pseudonymization for data that must be retained long-term

- Compression techniques that reduce storage footprints

Layer 4: Sharing Restrictions

Every time data leaves your control, minimization principles apply to what you share and who receives it.

Technical controls:

- Data export filters that limit shared fields

- Third-party access scopes that enforce need-to-know

- Contractual provisions requiring minimization by recipients

- Monitoring systems that flag unusual sharing patterns

Layer 5: Disposal Automation

The final layer ensures data that's served its purpose doesn't linger indefinitely.

Technical controls:

- Scheduled deletion jobs by retention period

- Secure deletion standards (overwriting, not just deletion flags)

- Automated audit trails proving deletion occurred

- Exception handling for legal holds and active disputes

When you implement across all five layers, data minimization becomes systematic rather than aspirational.

Technical Strategy #1: Collection Limitation by Design

Let's get specific. How do you actually implement collection limitation in your forms, APIs, and user interfaces?

The Form Field Audit Process

Start by listing every field in every data collection point—registration forms, checkout processes, contact forms, account settings, everything.

For each field, ask three questions:

- What purpose does this serve? Write the specific business function it enables.

- Is this purpose stated in our privacy documentation? If not, either add the purpose or remove the field.

- Can we accomplish this purpose without this data? Be honest here. Often the answer is yes.
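The three questions can be recorded as data so the audit is repeatable. This is a minimal sketch; the `FieldAudit` structure and the wording of the decisions are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FieldAudit:
    name: str
    purpose: Optional[str]      # Q1: what purpose does this serve?
    disclosed: bool             # Q2: is the purpose in our privacy documentation?
    achievable_without: bool    # Q3: can we accomplish the purpose without it?


def decide(field: FieldAudit) -> str:
    """Apply the three audit questions in order and return a recommendation."""
    if not field.purpose:
        return "remove"
    if not field.disclosed:
        return "add purpose to documentation or remove"
    if field.achievable_without:
        return "remove or make optional"
    return "keep"
```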

I recently did this exercise with an e-commerce client. Their checkout form collected:

- Full name ✓ (Needed for shipping)

- Email ✓ (Needed for order confirmation)

- Phone number ✗ (Optional for delivery updates—make it optional)

- Address ✓ (Needed for shipping)

- Company name ✗ (Only needed for B2B orders—conditionally display)

- Tax ID ✗ (Only needed for tax-exempt orders—conditionally display)

- Date of birth ✗ (No stated purpose—remove entirely)

- Gender ✗ (No stated purpose—remove entirely)

That simple audit removed 2 fields outright, made 1 optional, and moved 2 behind conditional display, leaving only 3 of the original 8 fields required on every order.

Progressive Disclosure Techniques

Don't ask for data until you need it. This technique is powerful for minimization because it prevents speculative data collection.

Example implementation:

Instead of asking for phone numbers at registration "in case we need to contact them," implement this flow:

- User registers with email only

- User initiates a shipping order → Now prompt for phone number as optional for delivery updates

- User opts into SMS notifications → Now phone number becomes required for that specific feature

You've transformed a universal requirement into a contextual, purpose-driven request.
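The phone number flow above can be expressed as a small decision function. This is only a sketch; the function name and the three return values are hypothetical labels.

```python
def phone_requirement(placing_shipping_order: bool, sms_opt_in: bool) -> str:
    """Decide how the phone field should be presented in the current context."""
    if sms_opt_in:
        return "required"        # the SMS feature cannot work without it
    if placing_shipping_order:
        return "optional"        # offered for delivery updates only
    return "not_requested"       # registration needs email only
```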

Default Settings That Favor Minimization

Here's where I see businesses trip up constantly: they design for maximum data collection and make users opt out.

Flip this. Your defaults should minimize data collection, with users opting in to additional data sharing.

Example:

Bad default: Newsletter subscription is pre-checked, collecting email marketing consent silently.

Good default: Newsletter subscription is unchecked. Users actively opt in, creating clear evidence of specific consent.

Another example:

Bad default: "Share data with partners for personalized offers" is enabled by default.

Good default: All third-party sharing is disabled. Users can enable categories they want.

This approach isn't just better privacy—it often improves data quality. Users who actively opt in are genuinely interested, reducing list decay and improving engagement metrics.

Making Optional Fields Actually Optional

I put this separately because it's critical: if you mark a field as optional, your system must function without it.

I've audited dozens of forms where "optional" fields are optional only in the UI. Backend validation throws errors if they're missing. Order processing fails. Account creation breaks.

Test every optional field by submitting forms without those fields filled. If anything breaks, either fix the backend logic or reclassify the field as required (and then ask if it should exist at all).
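That test can be automated. The sketch below assumes a hypothetical `create_account` handler and field names; the checker submits the form once per optional field with that field left out and reports which omissions break the handler.

```python
REQUIRED = {"name", "email"}       # hypothetical required fields
OPTIONAL = {"phone", "company"}    # hypothetical optional fields


def create_account(form: dict) -> dict:
    """Stand-in backend handler: only required fields may cause a failure."""
    missing = REQUIRED - form.keys()
    if missing:
        raise ValueError(f"Missing required fields: {sorted(missing)}")
    return {key: form.get(key) for key in REQUIRED | OPTIONAL}


def check_optional_fields(handler, full_form: dict, optional: set) -> list:
    """Return the optional fields whose absence makes the handler fail."""
    failures = []
    for field in sorted(optional):
        trimmed = {k: v for k, v in full_form.items() if k != field}
        try:
            handler(trimmed)
        except Exception:
            failures.append(field)
    return failures
```

An empty result means every field marked optional really is optional in the backend, not just in the UI.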

Technical Strategy #2: Automated Retention and Deletion

Collection limitation addresses data entering your systems. Retention addresses how long it stays. This is where manual processes break down and automation becomes essential.

Building Retention Schedules by Data Category

Not all data ages at the same rate. Your customer service chat logs have different retention needs than your financial transaction records.

Start by categorizing data:

Category 1: Active Transaction Data

- Customer orders, payment records, shipping information

- Retention: Typically 7 years for financial/tax purposes (the exact period varies by jurisdiction)

- Rationale: Legal obligation

Category 2: Account Data

- User profiles, preferences, login credentials

- Retention: Duration of active account + 90 days

- Rationale: Service delivery necessity

Category 3: Marketing/Communications Data

- Email preferences, newsletter subscriptions, consent records

- Retention: Until consent withdrawal + 90 days for proof of consent

- Rationale: Legitimate interest in proving compliance

Category 4: Support Interactions

- Chat logs, support tickets, feedback

- Retention: 2 years after case closure

- Rationale: Service improvement and dispute resolution

Category 5: Analytics/Behavioral Data

- Click streams, page views, session data

- Retention: 12 months, then aggregate/anonymize

- Rationale: Service improvement

Your categories will differ based on your business model, but the principle remains: define retention by purpose, not convenience.
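A retention schedule like this can live in configuration instead of a document. The sketch below mirrors the example periods above; the category keys are hypothetical, years are approximated as 365 days, and each period counts from a trigger date you define (account closure, consent withdrawal, case closure, and so on).

```python
from datetime import date, timedelta

# Days to retain after the category's trigger event (illustrative values).
RETENTION_DAYS = {
    "transaction": 7 * 365,   # after the transaction date
    "account": 90,            # after account closure
    "marketing": 90,          # after consent withdrawal
    "support": 2 * 365,       # after case closure
    "analytics": 365,         # after collection, then aggregate/anonymize
}


def retention_expiry(category: str, trigger_date: date) -> date:
    """Return the date on which a record in this category becomes due for action."""
    return trigger_date + timedelta(days=RETENTION_DAYS[category])
```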

Automated Deletion Workflows

Once you've defined retention periods, manual deletion is unsustainable. Here's why:

If you collect 1,000 new customer records monthly and each requires individual review for deletion eligibility, you're looking at 12,000 annual review decisions. At 5 minutes per review (checking account status, verifying retention period, confirming no legal holds), that's 1,000 hours of manual work.

Automation isn't optional at scale—it's the only viable approach.

Basic automated workflow:

1. Nightly job identifies records past retention dates

2. Checks for exception conditions (active disputes, legal holds)

3. Creates deletion queue with 30-day review period

4. Sends notification to data protection team

5. If no objection within 30 days, executes secure deletion

6. Logs deletion with timestamp and record identifier

The 30-day review period is your safety net. It gives you time to identify records that shouldn't be deleted (active customer reaching out after account closure, ongoing investigation, etc.) while maintaining systematic deletion as the default.
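The six steps can be sketched as a single nightly function. Everything here is an assumption made for illustration: the `Record` shape, the in-memory lists standing in for a database, a notification queue, and an audit log, and the fact that "secure deletion" is reduced to dropping the record from the returned list.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

REVIEW_PERIOD = timedelta(days=30)


@dataclass
class Record:
    record_id: str
    retention_expires: date
    legal_hold: bool = False
    objection: bool = False           # set by the data protection team during review
    queued_on: Optional[date] = None  # when the record entered the deletion queue


def nightly_run(records, today, deletion_log, notifications):
    """Run one pass of the workflow and return the records that remain."""
    remaining = []
    for rec in records:
        expired = rec.retention_expires <= today
        if not expired or rec.legal_hold or rec.objection:
            rec.queued_on = None                # steps 1-2: not eligible, or excepted
            remaining.append(rec)
        elif rec.queued_on is None:
            rec.queued_on = today               # step 3: enter the review queue
            notifications.append(rec.record_id) # step 4: notify the team
            remaining.append(rec)
        elif today - rec.queued_on >= REVIEW_PERIOD:
            # Step 5: real secure deletion would happen here.
            deletion_log.append((today.isoformat(), rec.record_id))  # step 6
        else:
            remaining.append(rec)               # still inside the review period
    return remaining
```

Clearing `queued_on` whenever a hold or objection appears means a released record starts a fresh 30-day review rather than being deleted immediately, which is one conservative way to handle releases.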

Archival vs. Deletion Decisions

Sometimes you need long-term data for legitimate purposes but can minimize privacy risk through archival.

Archival makes sense when:

- Legal obligations require retention (financial records, employment data)

- Data serves legitimate business interests (fraud prevention, warranty support)

- Statistical purposes justify retention but individual identification isn't needed

Archival strategies:

- Cold storage with restricted access: Move data to separate systems with enhanced access controls. Only authorized users for specific purposes can retrieve it.

- Pseudonymization: Replace direct identifiers with pseudonyms. You can still access the data for analytics or legal purposes, but it's no longer easily linkable to individuals.

- Aggregation: Transform individual records into statistical summaries. "Customer #12345 purchased 3 items" becomes "March 2024: 847 customers purchased 3+ items."
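One common way to pseudonymize is a keyed hash, which gives each identifier a stable pseudonym that cannot be recomputed without the key. This is a minimal sketch using Python's standard `hmac` module; the `pseudonymize` helper and the key handling are assumptions, and in practice the key would be stored separately from the archived data.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable pseudonym using HMAC-SHA256."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()
```

The same input and key always yield the same pseudonym, so records can still be joined for analytics. Note that pseudonymized data is still personal data under GDPR, because whoever holds the key can re-link it.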

The key question: Could we accomplish our legitimate purpose with less identifiable data? If yes, archive; don't just retain.

Legal Hold Considerations

One complexity: sometimes you can't delete data even when retention periods expire.

Legal holds occur when:

- You're party to active litigation

- Regulatory investigations are ongoing

- You receive a preservation notice from counsel or authorities

- You anticipate litigation and data is potentially relevant

Your automated deletion system must include hold management:

Hold implementation:

- Maintain a legal holds registry (active cases, preservation scope)

- Tag affected records with hold flags

- Exclude held records from automated deletion

- Review holds quarterly and release when appropriate

- Resume normal retention schedules after hold release

This is another area where privacy risk assessment methodologies become valuable—identifying when litigation risk requires preservation helps you balance minimization with legal prudence.

Technical Strategy #3: Purpose-Based Access Controls

Data minimization isn't just about external collection—it's about internal access. Who in your organization can access what data, and why?

The Need-to-Know Principle in Practice

Every person with data access should have it because their job function requires it, not because it's technically convenient to give everyone access to everything.

Role-based access implementation:

Customer Support Role

- Access: Customer contact information, order history, support ticket contents

- No access: Payment details, internal notes, aggregate analytics, full customer list

Marketing Role

- Access: Email addresses (for opted-in contacts), campaign performance data, aggregated demographics

- No access: Individual browsing behavior, payment information, support interactions

Finance Role

- Access: Payment information, transaction records, billing addresses

- No access: Marketing preferences, support communications, product usage data

Engineering Role

- Access: System logs, performance metrics, anonymized error reports

- No access: Personal identifiers in production databases, customer contact information

The principle: grant access to data categories, not universal database access.
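The role definitions above translate naturally into a mapping from role to permitted data categories. The category names below are hypothetical labels for the examples in the list, and `can_access` is an illustrative helper, not a real library call.

```python
ROLE_CATEGORIES = {
    "support": {"contact_info", "order_history", "support_tickets"},
    "marketing": {"opted_in_emails", "campaign_performance", "aggregated_demographics"},
    "finance": {"payment_info", "transaction_records", "billing_addresses"},
    "engineering": {"system_logs", "performance_metrics", "anonymized_error_reports"},
}


def can_access(role: str, category: str) -> bool:
    """Deny by default: unknown roles and unlisted categories get no access."""
    return category in ROLE_CATEGORIES.get(role, set())
```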

Audit Logging for Access Monitoring

Purpose-based controls only work if you can verify they're being followed. Audit logs create accountability.

What to log:

- Who accessed which data categories

- When access occurred

- What action was performed (view, edit, export, delete)

- What business purpose justified the access

Red flags to monitor:

- Access patterns that don't match job function

- Bulk exports of personal data

- After-hours access without explanation

- Repeated access to specific individual records (potential stalking scenario)
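A log entry that captures who, what, when, and why makes some of these red flags easy to detect. The sketch below assumes a hypothetical `AccessEvent` shape and arbitrary thresholds; it covers only bulk exports and repeated views of one record.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AccessEvent:
    user: str
    record_id: str
    action: str        # "view", "edit", "export", or "delete"
    purpose: str       # business purpose stated for the access
    timestamp: datetime
    rows: int = 1      # number of records touched


def red_flags(events, bulk_rows=1000, repeat_views=5):
    """Return (flag, user, detail) tuples for events that deserve a closer look."""
    flags = []
    views = Counter()
    for event in events:
        if event.action == "export" and event.rows >= bulk_rows:
            flags.append(("bulk_export", event.user, event.rows))
        if event.action == "view":
            views[(event.user, event.record_id)] += 1
    for (user, record_id), count in views.items():
        if count >= repeat_views:
            flags.append(("repeated_access", user, record_id))
    return flags
```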

I've worked with companies where audit log review identified sales reps searching for ex-partners in the customer database, marketing employees accessing competitors' accounts, and admins browsing celebrity customer records.

These aren't just privacy violations. Unauthorized internal access can itself qualify as a personal data breach under regulations such as GDPR. Monitoring catches these incidents before they become regulatory nightmares.

Cross-Functional Data Access Policies

The hardest access control decisions aren't within departments—they're between them.

Common scenario:

Marketing wants to analyze customer support interactions to improve messaging. Support data contains personal information (complaints, account details, sometimes sensitive information).

Bad solution: Give marketing direct database access.

Good solution: Create an automated pipeline that:

- Extracts support data

- Removes personal identifiers (names, emails, account numbers)

- Categorizes by issue type and outcome

- Provides marketing with aggregated insights

Marketing gets what they need (understanding of customer pain points), support maintains data protection, and you've minimized processing in the middle.

This approach aligns perfectly with Privacy by Design principles—you're building minimization into your business processes, not layering it on afterward.
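The pipeline steps for the marketing scenario could look roughly like this. The ticket fields (`issue_type`, `outcome`) and the identifier list are assumptions; free-text ticket bodies can still contain personal data and are not handled by this sketch.

```python
from collections import Counter

# Fields treated as direct identifiers in this illustration.
IDENTIFIER_FIELDS = {"name", "email", "account_number"}


def strip_identifiers(ticket: dict) -> dict:
    """Drop direct identifiers from a single support ticket."""
    return {k: v for k, v in ticket.items() if k not in IDENTIFIER_FIELDS}


def summarize(tickets) -> dict:
    """Count tickets per (issue type, outcome) after removing identifiers."""
    counts = Counter()
    for ticket in tickets:
        clean = strip_identifiers(ticket)
        counts[(clean["issue_type"], clean["outcome"])] += 1
    return dict(counts)
```

Marketing receives only the output of `summarize`, never the tickets themselves.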

Technical Strategy #4: Documentation That Reflects Reality

Here's an uncomfortable truth: most privacy documentation lies by omission or outdated information.

Your privacy policy says you "collect minimal personal information." Your registration form collects 19 fields. That's not minimal by any reasonable definition, and it's definitely not an accurate description of your practices.

Data minimization isn't real unless your documentation reflects it.

Mapping Data Minimization in Your ROPA

Your Records of Processing Activities (ROPA) under GDPR Article 30 must describe what data you collect and why. This is where minimization meets documentation.

For each processing activity, document:

- Data categories collected (be specific: "customer email addresses" not "contact information")

- Purpose for each category (be precise: "order confirmation emails" not "customer communications")

- Retention period (be definite: "2 years after last purchase" not "as long as necessary")

- Minimization rationale (be explicit: "email required for order confirmation; phone optional for delivery updates")

This level of detail does two things:

First, it forces you to actually think through whether you need each data element. Writing "we collect postal codes to... uh..." makes you realize you might not have a good reason.

Second, it creates a defensible record during audits. When regulators ask "why do you collect X?", you have documented justification rather than improvising answers.

Privacy Policy Disclosures

Your privacy policy needs to go beyond generic statements about minimization. Be specific about your practices.

Generic (problematic) disclosure: "We collect only personal information necessary for our business purposes."

Specific (defensible) disclosure: "We collect your name and email address to create your account and send order confirmations. We collect your shipping address only when you place an order. We do not collect demographic information, browsing history on other sites, or location data beyond what you provide during checkout."

The specific version tells users exactly what you do and don't collect. It sets clear expectations. And critically, it's verifiable—auditors can test whether your actual collection matches your stated practices.

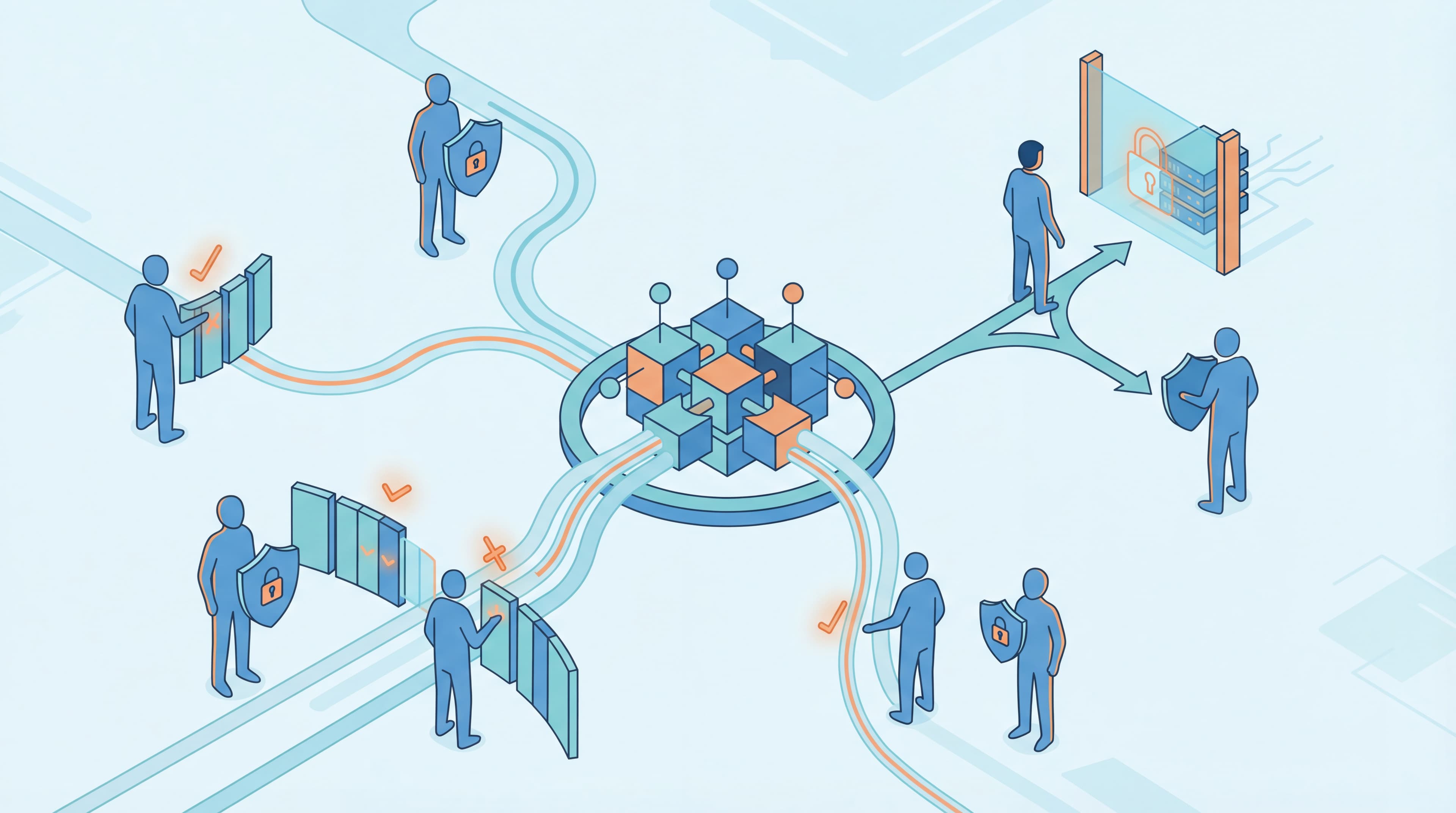

Data Flow Diagrams That Show Minimization

Visual documentation matters, especially for complex businesses with multiple data flows.

A data flow diagram should show:

- Collection points (website forms, APIs, third-party integrations)

- Data types at each point

- Processing activities (what you do with the data)

- Storage locations (databases, cloud services)

- Third-party transfers (who else receives data)

- Deletion points (when data exits your systems)

Minimization should be visible in the diagram—fewer data types, shorter retention arrows, limited third-party connections. If your diagram looks like a spider web with data going everywhere, that's a red flag that minimization isn't happening.

Proving Minimization During Audits

When regulators or auditors review your privacy practices, they'll look for evidence of minimization, not just claims about it.

Audit-ready evidence includes:

- Form field audit documentation with justifications

- Retention schedules with date-based deletion logs

- Access control configurations showing role-based restrictions

- Examples of data requests showing only necessary information was accessed

- Change logs showing when you eliminated unnecessary collection

The gold standard: your privacy documentation generates itself from your actual systems. When collection changes, documentation updates automatically. When retention periods adjust, policies reflect it immediately.

That level of synchronization between practice and documentation is exactly what modern privacy platforms enable—and why manual documentation becomes unsustainable as you scale.
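To make the idea of self-generating documentation concrete, here is a minimal sketch in which disclosure text is rendered from the same definitions that drive collection. The definition format and the `render_disclosure` helper are hypothetical and do not describe how any particular platform works.

```python
# Single source of truth for what is collected and why (illustrative entries).
COLLECTION_DEFINITIONS = [
    {"field": "email address", "purpose": "send order confirmations",
     "retention": "2 years after last purchase", "required": True},
    {"field": "phone number", "purpose": "send delivery updates",
     "retention": "90 days after delivery", "required": False},
]


def render_disclosure(definitions) -> str:
    """Render one plain-language disclosure sentence per collected field."""
    lines = []
    for d in definitions:
        verb = "collect" if d["required"] else "optionally collect"
        lines.append(
            f"We {verb} your {d['field']} to {d['purpose']} "
            f"and keep it for {d['retention']}."
        )
    return "\n".join(lines)
```

If a field is removed from the definitions, it disappears from the rendered text on the next build, which keeps the policy and the practice from drifting apart.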

The Tools You Need (And When Automation Becomes Essential)

Let's talk practically about implementation. When can you handle data minimization manually, and when do you need automated tools?

Manual Approaches for Early-Stage Businesses

If you're a small business with:

- Fewer than 1,000 customer records

- Simple data collection (name, email, maybe address)

- Single-purpose use case (e.g., newsletter only)

- No complex third-party integrations

Manual minimization is viable:

What manual looks like:

- Spreadsheet tracking data categories and purposes

- Calendar reminders for quarterly data cleanup

- Manual review of forms before launch

- Documented decisions about what to collect

Time investment: Approximately 4-8 hours monthly for data management, quarterly audits, and documentation updates.

This works when your data footprint is small and relatively static.

When to Invest in Automation

Manual processes break at predictable points:

Signal #1: You can't keep up with deletion requests

If fulfilling a deletion request means manually searching multiple systems and takes days, you've hit the automation inflection point.

Signal #2: Your documentation is always outdated

If your privacy policy says one thing but your current practices are different because you haven't had time to update documentation, manual processes have failed.

Signal #3: You're collecting data "just in case"

When your team defaults to collecting more data because it's easier than figuring out what's necessary, you need automated enforcement of minimization rules.

Signal #4: Compliance feels reactive, not systematic

If you're addressing privacy obligations only when they become urgent (audit notice, customer complaint, regulatory inquiry), manual approaches aren't scaling with your business.

Any of these signals means it's time to consider automation.

How Platforms Like PrivacyForge Enforce Minimization Through Documentation

Here's where I need to be direct about how modern privacy platforms approach this problem—because it illustrates why documentation-first solutions align naturally with minimization.

Traditional approach: Build technical systems, then try to document them accurately. Documentation lags behind reality, creating compliance gaps.

Documentation-first approach: Define what data you collect and why, generate documentation from those definitions, then use that documented reality as your implementation guide.

How this enforces minimization:

- Collection definition forces justification: When you must articulate why you collect each data point to generate your privacy policy, you confront whether you really need it.

- Purpose binding becomes explicit: Your documented purposes limit what you can do with data. If your privacy policy says "email for order confirmation," using those emails for marketing requires policy updates, which creates intentional friction.

- Retention schedules become commitments: When your policy states specific retention periods, they're not aspirational; they're documented commitments you must honor.

- Minimization becomes verifiable: Your privacy documentation describes your minimization practices in concrete terms, creating a standard against which your actual practices can be measured.

The key insight: good documentation doesn't just describe minimization—it enforces it by creating accountability between stated practices and technical reality.

Building vs. Buying: Decision Framework

Should you build your own privacy management infrastructure or use a platform?

Build makes sense when:

- You have unique technical requirements that standard platforms don't address

- You have engineering resources available for ongoing maintenance

- Your business processes are highly specialized

- You need deep customization of workflows

Buy makes sense when:

- Your compliance needs are standard (GDPR, CCPA, PIPEDA)

- You need to move quickly without engineering bottlenecks

- You want to focus engineering resources on core product, not compliance infrastructure

- You need documentation that stays current with regulatory changes

For most SMBs, buying is the pragmatic choice. Your engineering team's time is better spent building product features than recreating compliance infrastructure that already exists.

The real question isn't whether platforms are useful—it's which aspects you need automated and which you can manage internally.

Common Data Minimization Mistakes (And How to Avoid Them)

Let me share the patterns I see repeatedly that undermine minimization efforts:

Mistake #1: "We Might Need It Later" Syndrome

The pattern: Team members advocate for collecting additional data fields because "we're considering a feature that might need it."

Why it's problematic: You're creating privacy obligations and risk for hypothetical future needs. If the feature never launches, you've collected unnecessary data permanently.

The fix: Implement a "just-in-time" collection rule. Collect new data categories only when features requiring them are launched, not when they're proposed. Make data collection the final step of feature rollout, not the first.

Mistake #2: Over-Collecting "Just in Case"

The pattern: Forms collect phone numbers "in case we need to contact customers urgently," demographic information "in case we want to analyze customer segments," and secondary email addresses "in case primary emails bounce."

Why it's problematic: "Just in case" isn't a legitimate purpose under GDPR or CCPA. Under GDPR Article 5(1)(b), purposes must be specified, explicit, and legitimate, and "we might need it someday" fails all three tests.

The fix: Require documented purposes before any data collection. No field gets added without answering: "What specific business function requires this data, and is that function explicitly disclosed to users?"

Mistake #3: Ignoring Indirect Data Creation

The pattern: Teams focus on form-collected data but ignore logs, analytics, derived attributes, and inferred data your systems generate automatically.

Why it's problematic: Your web server creates IP address logs. Your analytics tools create behavioral profiles. Your recommendation engine creates preference inferences. This is all personal data subject to minimization requirements.

The fix: Audit systems for automatically generated data. Apply the same retention and access controls to server logs as form data. Configure analytics tools to minimize data collection (anonymized IP addresses, reduced cookie duration, limited tracking scope).

Mistake #4: Failing to Minimize in Analytics

The pattern: Analytics platforms collect every possible data point because "more data means better insights."

Why it's problematic: Most analytics questions don't require personal identification. You can answer "What's our conversion rate by traffic source?" without knowing individual identities.

The fix: Implement privacy-preserving analytics:

- Anonymize IP addresses

- Use session-based tracking without cross-site identifiers

- Aggregate data before analysis

- Set short cookie durations (session-only when possible)

- Disable unnecessary tracking features (demographic tracking if you don't need it)

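Google Analytics 4, for example, does not log or store IP addresses and offers data retention controls, but older or self-hosted analytics setups often need IP anonymization and similar limits switched on explicitly. Either way, minimization requires active configuration: review each setting against your stated purposes rather than trusting defaults.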
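For logs and analytics you control yourself, IP anonymization is commonly done by zeroing the host portion of the address. The sketch below uses Python's standard `ipaddress` module; the choice of a /24 prefix for IPv4 and /48 for IPv6 is an assumption, and stricter truncation may be appropriate for your risk profile.

```python
import ipaddress


def anonymize_ip(address: str) -> str:
    """Truncate an IP address to its network prefix before storing it."""
    ip = ipaddress.ip_address(address)
    prefix = 24 if ip.version == 4 else 48
    network = ipaddress.ip_network(f"{address}/{prefix}", strict=False)
    return str(network.network_address)
```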

Mistake #5: Universal Access for Convenience

The pattern: Giving all employees access to customer databases because it's easier than setting up role-based access controls.

Why it's problematic: Access minimization is part of data minimization. If someone doesn't need data to do their job, they shouldn't have access to it.

The fix: Start with zero access and add roles as needed. It's more work initially but prevents privacy incidents from unauthorized access. Implement the access controls discussed earlier in this guide.

Mistake #6: Indefinite Retention as Default

The pattern: Data gets stored forever because there's no active decision to delete it. Databases grow continuously with no cleanup.

Why it's problematic: Data you retained when you had 100 customers becomes a massive liability when you have 100,000. Every old record is a potential breach exposure and an ongoing retention violation.

The fix: Implement the automated deletion workflows discussed earlier. Make deletion the default, retention the exception requiring justification.

Your 30-Day Data Minimization Implementation Plan

Theory is useful. Implementation timelines are better. Here's how to execute data minimization systematically over the next month.

Week 1: Data Collection Audit

Day 1-2: Inventory collection points

Create a comprehensive list:

- Website forms (contact, registration, checkout, newsletter signup)

- API endpoints (what data they accept)

- Third-party integrations (what they send you)

- Manual data entry (customer service adding notes)

- Automatically generated data (logs, analytics, system-generated attributes)

Day 3-4: Field-level analysis

For each data point:

- What is collected (be specific)

- Why it's collected (stated purpose)

- Where that purpose is disclosed (privacy policy, consent form)

- Whether collection is required or optional

Day 5: Identify minimization opportunities

Review your analysis for:

- Fields with no clear purpose → Eliminate

- Fields with weak justification → Evaluate whether truly necessary

- Required fields that could be optional → Make them optional

- Data collected "just in case" → Remove unless launching related feature

Day 6-7: Document decisions

Create a minimization action plan:

- Immediate changes (fields to remove this week)

- Short-term changes (fields to make optional, which require engineering work)

- Policy changes (updated disclosures, new collection rationales)

Week 2: Retention Policy Creation

Day 8-10: Categorize existing data

Group data by retention requirements:

- Legal retention obligations (financial records, employment data)

- Service delivery needs (active customer accounts)

- Consent-based retention (marketing communications)

- No ongoing purpose (old customer data after account closure)

Day 11-12: Define retention schedules

For each category:

- Retention period (specific timeline: "2 years" not "as needed")

- Retention rationale (legal obligation, legitimate interest, consent)

- Post-retention action (delete, archive, anonymize)

Day 13-14: Document retention policy

Create written policy covering:

- Data categories and their retention periods

- Exception processes (legal holds)

- Deletion procedures (technical steps)

- Review schedule (who checks retention compliance, how often)

Week 3: Technical Implementation

Day 15-17: Collection changes

Implement Week 1 decisions:

- Remove unnecessary form fields

- Update field requirements (required → optional)

- Add progressive disclosure for conditional fields

- Update form validation logic

Day 18-20: Access control implementation

Set up role-based data access:

- Define roles and their data needs

- Configure access controls in systems

- Test access restrictions (confirm each role is actually denied data outside its scope)

- Document access policies
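Role-based data access comes down to a mapping from roles to the fields each role needs, enforced in code rather than by convention. The following Python sketch shows the idea at field level. The roles, field names, and the `view_for_role` helper are assumptions for illustration; in production this filtering would more likely live in your database permissions or API layer.

```python
from typing import Any, Dict, FrozenSet

# Hypothetical role definitions: each role lists only the fields it needs.
ROLE_FIELDS: Dict[str, FrozenSet[str]] = {
    "support": frozenset({"name", "email", "plan"}),
    "billing": frozenset({"name", "email", "billing_address", "plan"}),
    "analytics": frozenset({"plan", "signup_month"}),
}


def view_for_role(record: Dict[str, Any], role: str) -> Dict[str, Any]:
    """Return only the fields the role may see; unknown roles see nothing."""
    allowed = ROLE_FIELDS.get(role, frozenset())
    return {key: value for key, value in record.items() if key in allowed}


customer = {
    "name": "Ada Example",
    "email": "ada@example.com",
    "billing_address": "1 Main St",
    "plan": "pro",
    "signup_month": "2025-01",
}

print(view_for_role(customer, "analytics"))  # plan and signup_month only
print(view_for_role(customer, "intern"))     # {} because the role is undefined
```

Denying by default is the design choice worth copying: a role that nobody defined gets an empty view, which also gives you an easy test case for the "test access restrictions" step.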

Day 21: Retention automation setup

Start deletion automation:

- Identify records past retention dates

- Create deletion queue with review period

- Set up automated deletion jobs (nightly/weekly)

- Configure deletion logging
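The four steps above can be sketched as a two-phase job: one pass that queues expired records, and a later pass that deletes them once the review period has elapsed. This is a minimal in-memory illustration in Python, not a production design. The `Record` structure, the 14-day `REVIEW_PERIOD`, and the function names are all assumptions, and the legal-hold check reflects the exception process from the Week 2 retention policy.

```python
import logging
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List, Optional

logger = logging.getLogger("retention")
REVIEW_PERIOD = timedelta(days=14)  # assumed review window before deletion


@dataclass
class Record:
    record_id: str
    category: str
    retain_until: date
    legal_hold: bool = False
    queued_on: Optional[date] = None  # set when the record enters the deletion queue


def queue_expired(records: List[Record], today: date) -> List[Record]:
    """Identify records past their retention date and place them in the queue."""
    queued = []
    for record in records:
        if record.legal_hold or record.queued_on is not None:
            continue
        if record.retain_until < today:
            record.queued_on = today
            queued.append(record)
    return queued


def run_deletion_job(store: Dict[str, Record], today: date) -> List[str]:
    """Delete queued records whose review period has elapsed, logging each one."""
    deleted = []
    for record_id, record in list(store.items()):
        if record.queued_on is None or record.legal_hold:
            continue
        if today - record.queued_on >= REVIEW_PERIOD:
            del store[record_id]
            logger.info("deleted record=%s category=%s queued_on=%s",
                        record_id, record.category, record.queued_on)
            deleted.append(record_id)
    return deleted
```

A scheduler (cron, a task queue, or whatever you already run nightly jobs with) would call `queue_expired` and then `run_deletion_job` with the current date. Rechecking `legal_hold` at deletion time is deliberate, since a hold can be placed during the review window.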

Week 4: Documentation and Training

Day 22-24: Update privacy documentation

Revise key documents to reflect minimization practices:

- Privacy policy updates (specific collection disclosures)

- ROPA updates (data categories, purposes, retention)

- Data flow diagrams (if applicable)

- Internal minimization guidelines

Day 25-26: Team training

Train relevant teams on:

- New collection practices (marketing, product)

- Access control policies (all employees)

- Retention requirements (engineering, data teams)

- Minimization principles (everyone)

Day 27-28: Monitoring setup

Implement ongoing oversight:

- Schedule quarterly data audits

- Set up access log review process

- Create retention compliance checks

- Define minimization KPIs (% optional fields, average retention period, etc.)
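The two KPIs named above are simple enough to compute from data you already have after Weeks 1 and 2. A small Python sketch follows, assuming the form fields are available as dictionaries with a `required` flag and the retention schedule as a mapping from category to days. Both input shapes and both function names are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def optional_field_pct(fields: List[Dict[str, object]]) -> float:
    """Percentage of collected fields that are optional rather than required."""
    if not fields:
        return 0.0
    optional = sum(1 for field in fields if not field["required"])
    return 100.0 * optional / len(fields)


def average_retention_days(schedule: Dict[str, int]) -> float:
    """Unweighted mean retention period across data categories, in days."""
    if not schedule:
        return 0.0
    return float(mean(schedule.values()))


# Illustrative inputs (assumed values).
form_fields = [
    {"name": "email", "required": True},
    {"name": "account_type", "required": True},
    {"name": "company_name", "required": True},
    {"name": "job_title", "required": False},
]
retention_schedule = {"closed_accounts": 90, "marketing_contacts": 730, "financial_records": 2555}

print(optional_field_pct(form_fields))             # 25.0
print(average_retention_days(retention_schedule))  # 1125.0
```

Neither number means much in isolation. Their value is in the trend between quarterly audits: a falling optional-field percentage or a rising average retention period is a signal of scope creep worth investigating.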

Day 29-30: Final review and optimization

Review implementation:

- Test new forms and workflows

- Verify deletion automation works

- Confirm documentation accuracy

- Identify remaining optimization opportunities

At the end of 30 days, you have a functioning data minimization program—not perfect, but systematic and improvable.

The Reality of Sustainable Minimization

Here's what I've learned helping dozens of businesses implement data minimization: perfection isn't the goal, and it's not achievable anyway.

Your business will evolve. You'll launch new features requiring new data. Regulations will change. Technologies will emerge with different data implications.

Sustainable minimization isn't about achieving a perfect minimal state—it's about building systematic practices that question, justify, and optimize data collection continuously.

The practices that matter:

- Default to minimal: When someone proposes collecting new data, the default response is "why do we need this?" with the burden of proof on the proposer.

- Document everything: Every data category has an articulated purpose. If you can't explain why you collect something, you shouldn't collect it.

- Automate enforcement: Retention happens automatically. Access controls are technical, not policy-based. Minimization is systematized, not dependent on individual discipline.

- Review regularly: Quarterly audits catch scope creep, identify deprecated data, and ensure your practices match your documentation.

- Prioritize maintenance: Privacy isn't a project with an end date; it's an ongoing practice requiring dedicated resources.

The businesses that succeed with minimization treat it like security: a core business practice with dedicated ownership, not a compliance checkbox to complete and forget.

When Manual Processes Aren't Enough Anymore

I want to end with a practical observation: every business I work with eventually hits the point where manual privacy management becomes unsustainable.

The inflection point isn't at a specific size or data volume—it's when your team spends more time documenting compliance than achieving it.

When privacy requests take days instead of hours because you're manually searching systems...

When your documentation is always outdated because updating it requires engineering time you don't have...

When you're collecting data you don't need because saying "no" requires more explanation than saying "yes"...

That's when documentation-first platforms like PrivacyForge become not just convenient, but essential for sustainable compliance.

The promise of automated privacy documentation is simple: your practices and your documentation stay synchronized. When you change collection, your privacy policy updates. When you modify retention, your ROPA reflects it. When you implement minimization, your documentation proves it.

That synchronization—between what you do and what you say you do—is the foundation of defensible data minimization.

Ready to stop managing privacy documentation manually? Start here and see how quickly we can generate privacy documentation that actually reflects your data minimization practices. Get your first privacy policy in minutes, not months—and ensure it stays current as your practices evolve.
